
05. Google NotebookLM Infographics - tips and techniques

- Details

- Category: Google NotebookLM

- Hits: 1315

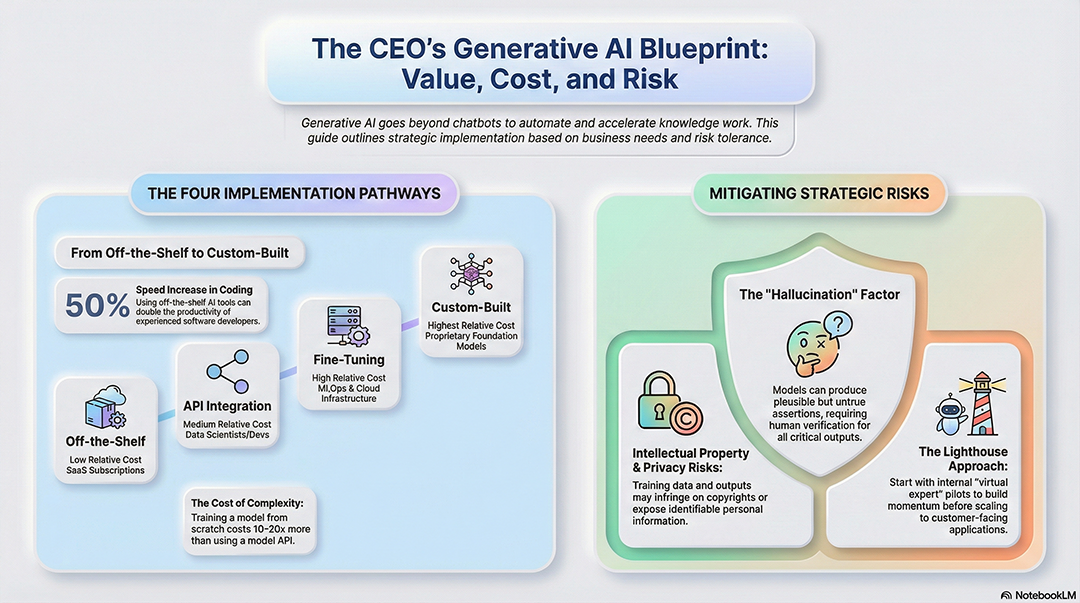

The "Infographic" tool in the Studio panel uses your uploaded sources (PDFs, URLs, YouTube transcripts) to generate structured visuals. To customize them, click the pencil icon before you hit Generate.

- The "Neumorphic Dashboard" (Modern/Tactile)

Neumorphism uses soft shadows and highlights to make flat elements look like they are "pushed out" from the background. It feels very high-tech, clean, and futuristic—great for visualizing software features or digital trends.

Style: Neumorphism.

Use soft 'extruded' cards and subtle drop shadows on a light grey background.


Gemini Gems 101: Step-by-Step Guide to Creating your First Gem

- Details

- Category: Google Gemini

- Hits: 9077

What Are Gemini Gems?

Gemini Gems are like custom-made AI assistants that you create within Google Gemini. They're designed to be highly specialized for a particular task, saving you time and giving you more consistent results than if you were to write a long, detailed prompt from scratch every time.

Think of it this way: instead of repeatedly telling a general-purpose AI, "I need you to act as a marketing expert, with a professional yet friendly tone, and give me three social media post ideas for a new product launch," you just create a "Social Media Guru" Gem once. Then, every time you need social media help, you simply open that Gem and give it the quick instruction, like "Give me posts for the new product launch." The Gem already knows its persona, its tone, and its purpose.
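The idea of "set the persona once, then send short requests" can be sketched in a few lines of Python. This is only an illustration of the concept, not how Gemini implements Gems: the `Gem` class, its fields, and `build_prompt` are hypothetical names invented for this example, and no Gemini API is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gem:
    """Hypothetical stand-in for a Gem: instructions saved once and reused."""
    name: str
    persona: str
    tone: str
    task: str

    def build_prompt(self, request: str) -> str:
        # Prepend the saved instructions to the short request, so the
        # user never has to retype the persona, tone, or task.
        return (
            f"Act as {self.persona}, with a {self.tone} tone. "
            f"{self.task}\n\nRequest: {request}"
        )


# Created once, mirroring the "Social Media Guru" example above.
social_media_guru = Gem(
    name="Social Media Guru",
    persona="a marketing expert",
    tone="professional yet friendly",
    task="Give three social media post ideas.",
)

# Every later use needs only the quick instruction.
print(social_media_guru.build_prompt("Give me posts for the new product launch."))
```

In the real product the saved instructions live inside Gemini, so you only type the last line's request; the sketch just makes visible what the Gem is remembering for you.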


Napkin AI - Generating professional graphics for your PowerPoint slides

- Details

- Category: Useful AI Tools

- Hits: 2703

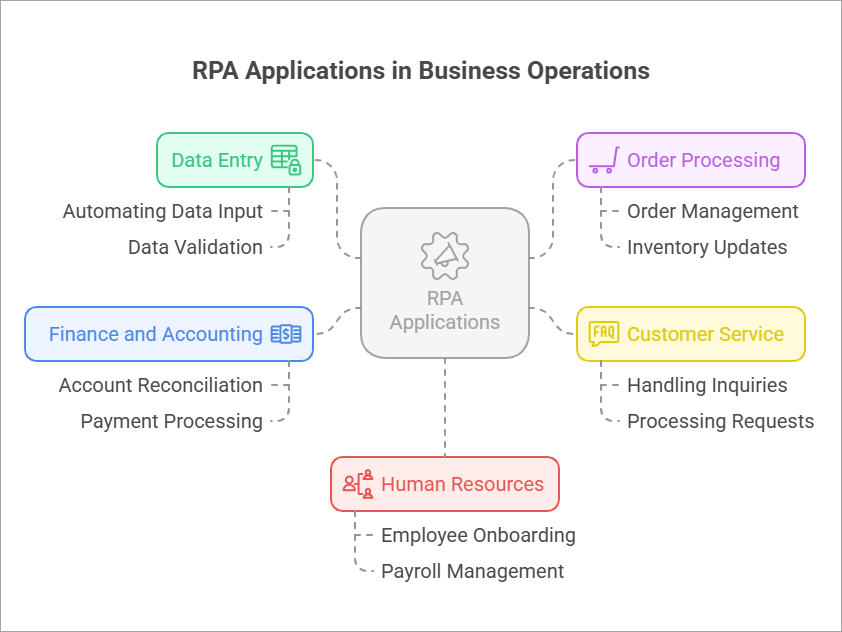

Need some professional McKinsey-style graphics for your PowerPoint slides?

After trying out many different AI tools, I find Napkin AI is the best! It is easy to use, and the graphics it produces are really professional. And the best part: it’s entirely free (for now)!

Website: https://app.napkin.ai/

Highlights:

- What makes Napkin unique is its prompt-free approach—simply paste your text and click to generate relevant visuals instantly.

- Provides many different variations of infographics for you to choose from

- Supports 60+ languages

- Exports to multiple formats (PPT, PNG, PDF, SVG)


ChatGPT Study Mode: A Smarter Way to Learn and Retain Knowledge

- Details

- Category: ChatGPT

- Hits: 1953

What is Study Mode

The new ChatGPT Study Mode is designed to make learning feel more like having a personal tutor by your side. Instead of just giving you the answer, it walks you through topics step by step using Socratic questioning: asking questions, giving quizzes, and offering feedback along the way. This keeps you actively involved, helps you really understand the material, and builds critical thinking skills. Whether you’re doing homework, studying for an exam, or trying to wrap your head around a tricky concept, Study Mode adjusts to your pace and keeps things engaging, like having a smart, patient tutor who’s available whenever you need one.

Study Mode is available to all logged-in users, including those on the Free, Plus, Pro, and Team plans.


From Bedtime Stories to Boardrooms: The Power of Google Gemini Storybook

- Details

- Category: Google Gemini

- Hits: 14142

When you first hear "Gemini Storybook," your mind probably jumps to fairy tales and adventures for kids. And you'd be right! This incredible new tool from Google is a game-changer for parents who want to create personalized, illustrated stories for their children. But here's a secret your colleagues might have already discovered: its power isn't limited to the kids' room.

What is it, and what makes it different?

Google Gemini Storybook is an AI-powered tool that transforms your ideas into beautifully illustrated, multi-page storybooks. Built into the Gemini app, it seamlessly merges creative text and stunning visuals. What makes it stand out is its ability to create a cohesive, book-like experience, not just a random string of images and words. While other AI tools can generate one or the other, Gemini Storybook brings it all together in a polished, easy-to-use format.

But here’s the key: the "storybook" format is just a powerful way to present information in an engaging, narrative style. This makes it perfect for more than just children's tales. You can use it to create compelling content for your professional life, too.


Gemini 2.5 Flash Image (aka Nano Banana) - the New King of AI Image Editing

- Details

- Category: Google Gemini

- Hits: 1966

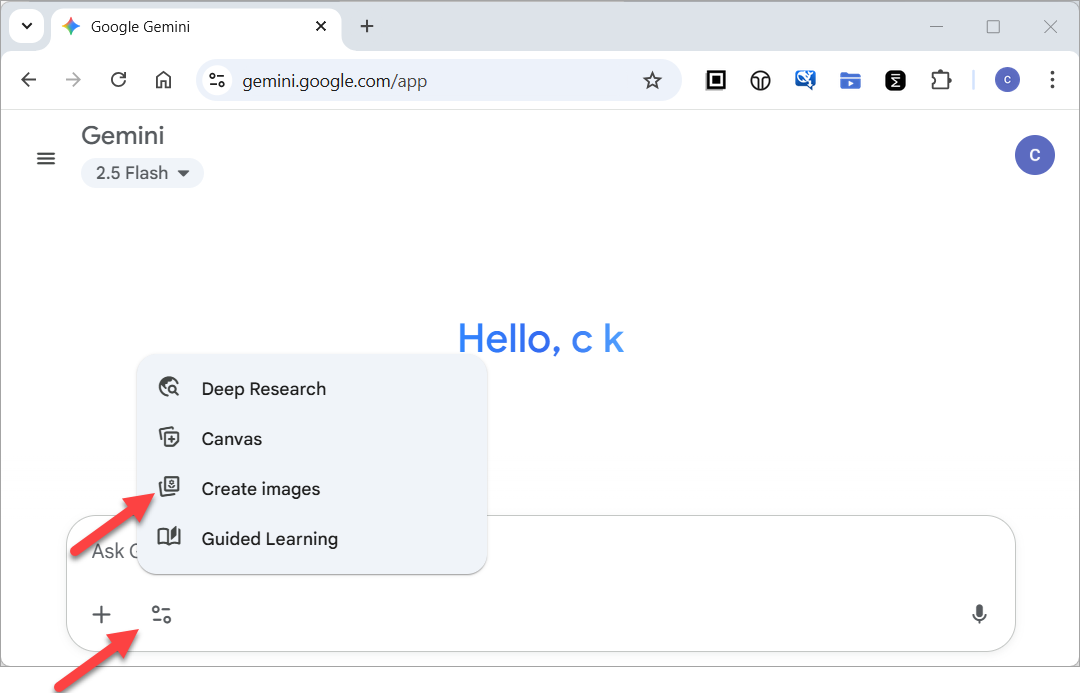

Gemini 2.5 Flash Image - the nano banana!

Google recently unveiled Gemini 2.5 Flash Image (internally nicknamed "nano banana"), a groundbreaking AI model that's quickly becoming the new benchmark in image generation and editing. It stands out for its ability to blend multiple images seamlessly, maintain character consistency across different scenes, and make precise edits using simple natural language commands. This isn't just about creating pretty pictures; it's a powerful tool for marketers, designers, and creators, offering professional-grade results with ease and speed. Many already consider it the best image model available today.

Accessing Nano Banana

- Go to: https://gemini.google.com/

- Click the Tools button, and select "Create images"

How to generate an image prompt from a photo so that you can create a similar image?

- Details

- Category: AI Tips & Techniques

- Hits: 2395

Problem

We now have access to many good image generators, including:

- ChatGPT image generation

- Qwen image generation

- The latest Gemini 2.5 Flash Image (aka "nano-banana")

How to generate an image prompt from a photo so that you can create a similar image?


So many LLMs and Models! Which one should I use?

- Details

- Category: AI Tips & Techniques

- Hits: 2380

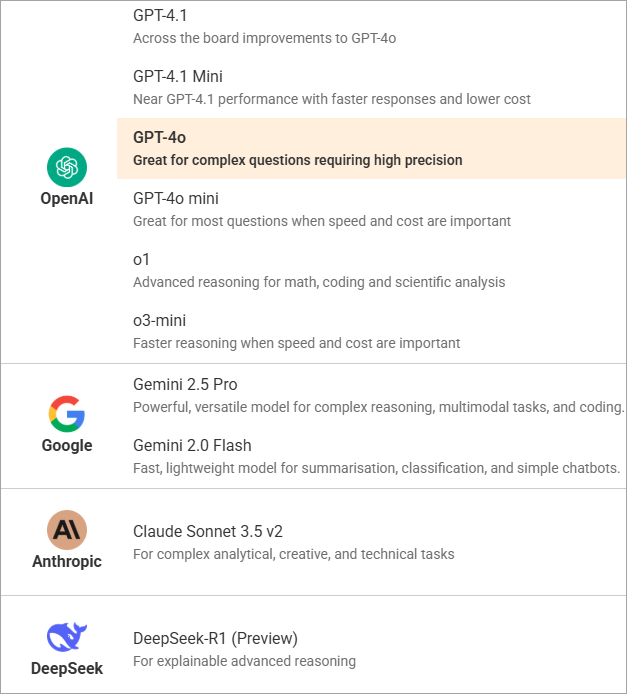

Problem

Below are some of the most popular LLMs:

One of the biggest challenges people face now is: which one should I use?

Chat Model vs Reasoning Model

First of all, it's important to understand the key differences between Chat Models and Reasoning Models: