Thanks for your patience. This document is complete. Please give the following a try and let me know if it works, ok!

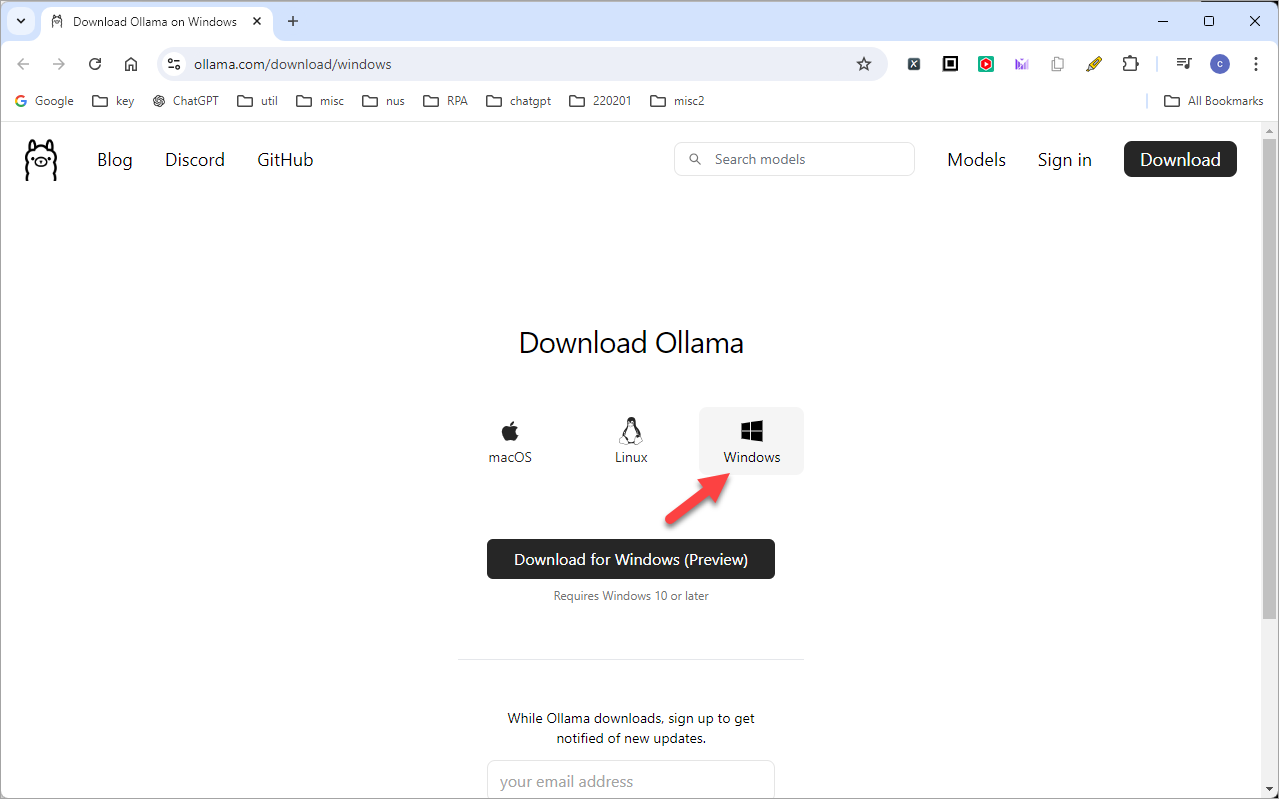

Download and Install

- Go to: https://ollama.com/download

- Ollama now supports three platforms: macOS, Linux and Windows. Click the one for your platform.

- Open and run the downloaded installer file.

- When the installation completes, the installation window simply disappears. There will be no message on the screen to indicate the installation is successful.

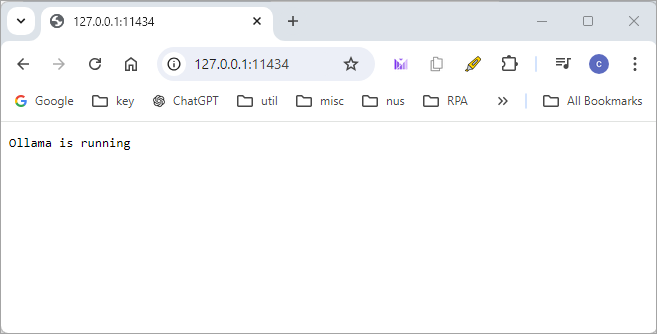

- To test whether your Ollama setup is OK, open a browser and enter the following address:

http://127.0.0.1:11434/

If you see the following message, it means Ollama has been set up successfully!

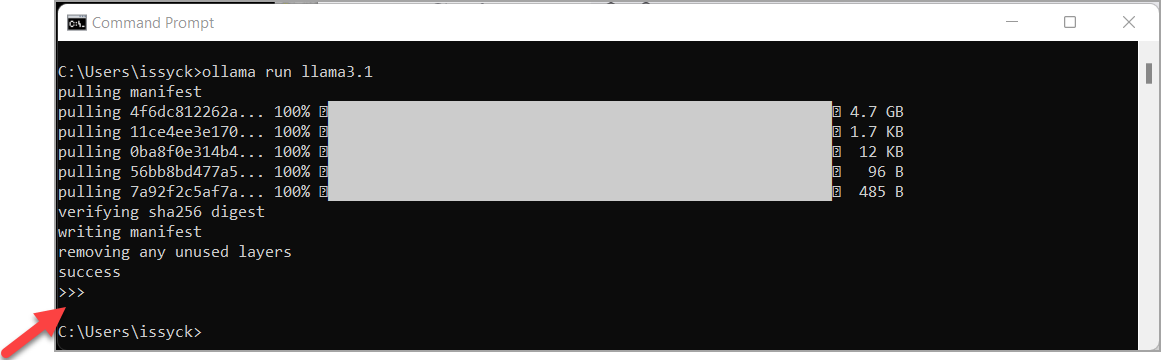
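The same check can also be scripted. The following is a minimal sketch, assuming Ollama is listening on its default address http://127.0.0.1:11434/ as above. The function name `is_ollama_up` is made up for illustration and is not part of Ollama.

```python
import urllib.request


def is_ollama_up(url="http://127.0.0.1:11434/", timeout=3):
    """Return True if something answers with HTTP 200 at the given URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Connection refused, timeout, or HTTP error: treat as "not running".
        return False
```

If the installation worked and Ollama is running, calling `is_ollama_up()` should return `True`. A `False` result usually means the server is not started yet.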

Download LLM on Windows

- To use Ollama, you need to download at least one LLM.

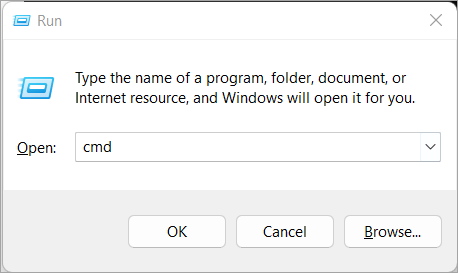

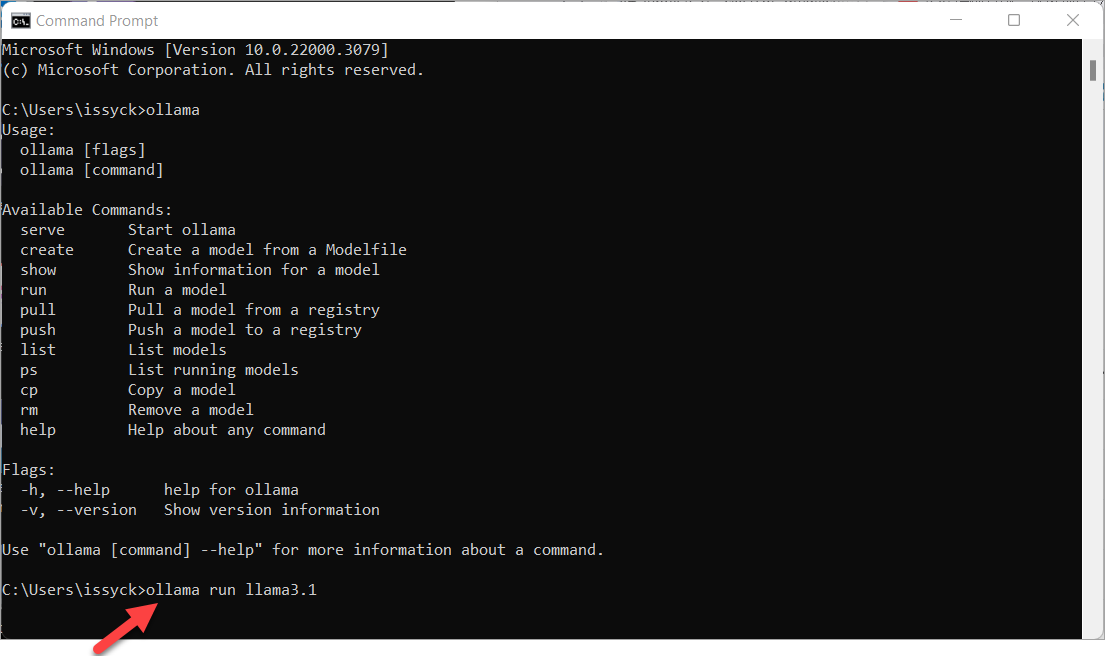

- On Windows, press Win+R, type "cmd" and press Enter to open a command prompt.

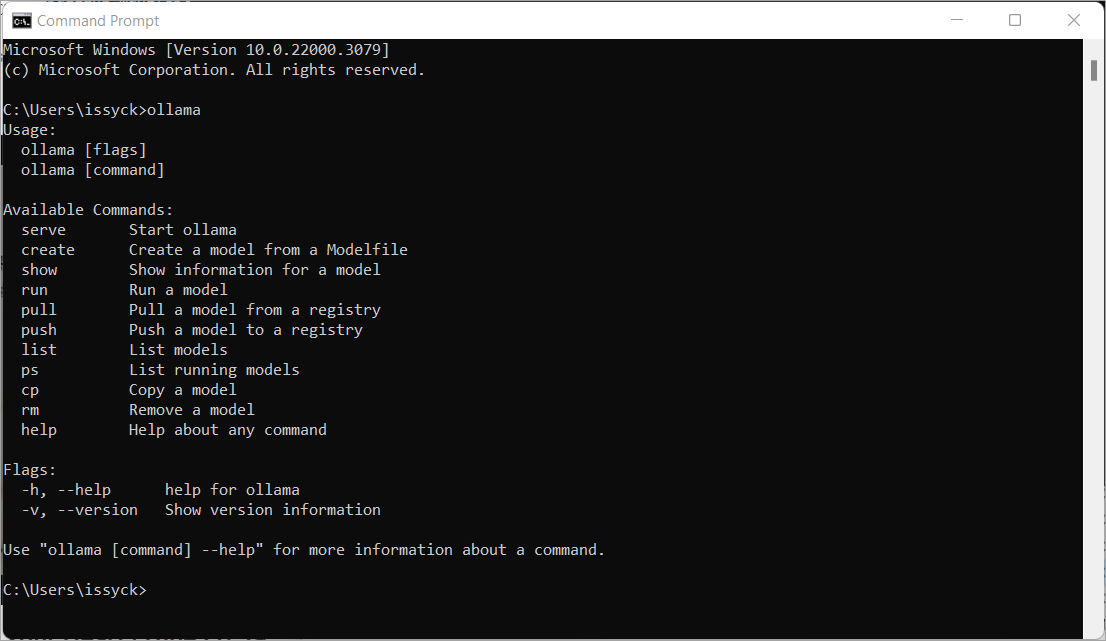

- In the command prompt, type:

ollama

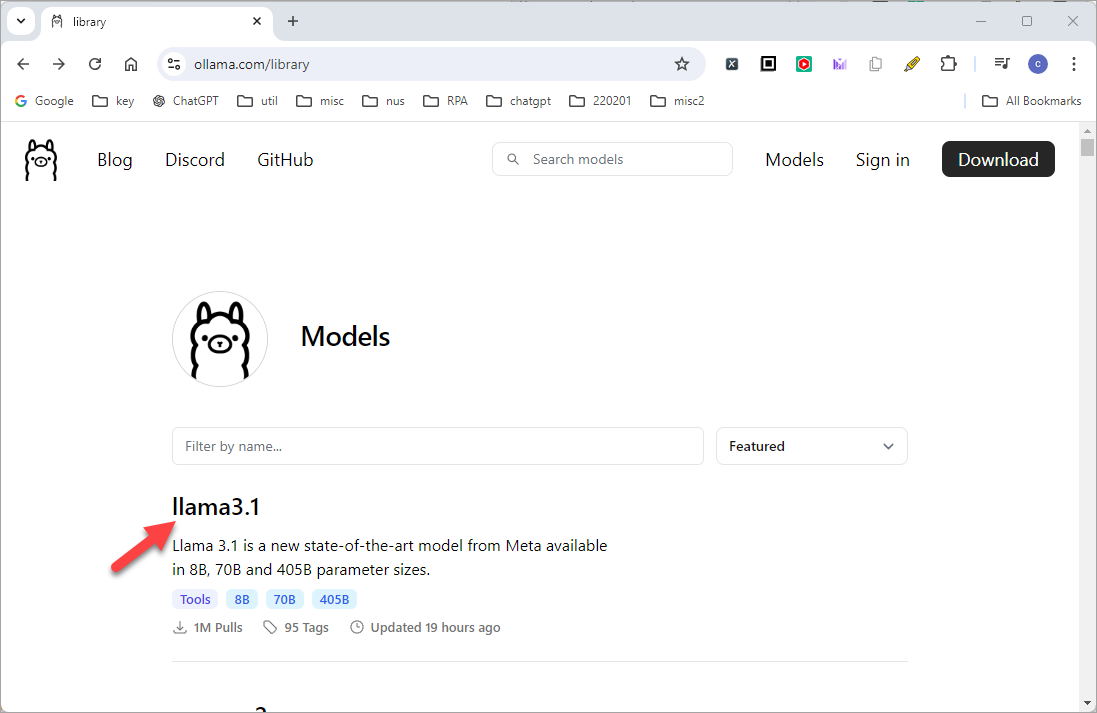

You will see the following: - Go to: https://ollama.com/library

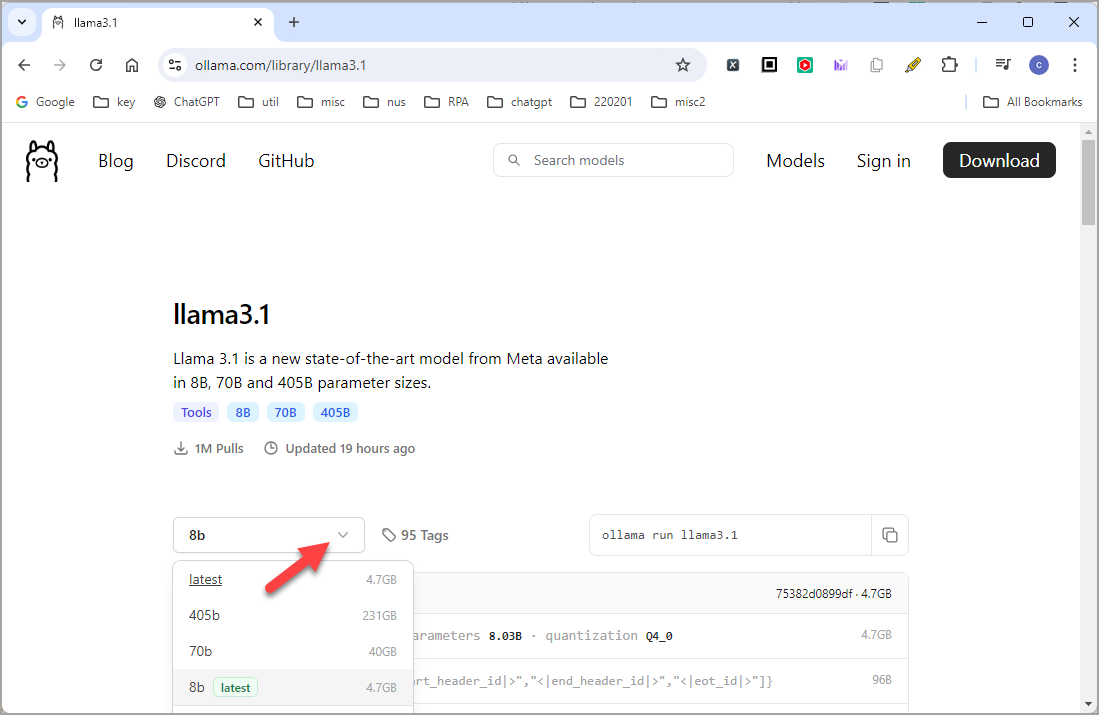

This page lists the open-source LLMs available for Ollama, including Meta's Llama models.

- Click the latest Llama model (as of the time of writing), "llama3.1".

- Click the pulldown menu. Llama 3.1 is available in 8B, 70B and 405B parameter sizes. Take note of the size of each model and make sure your hard disk has enough space for it. The 8B model requires at least 4.7GB.

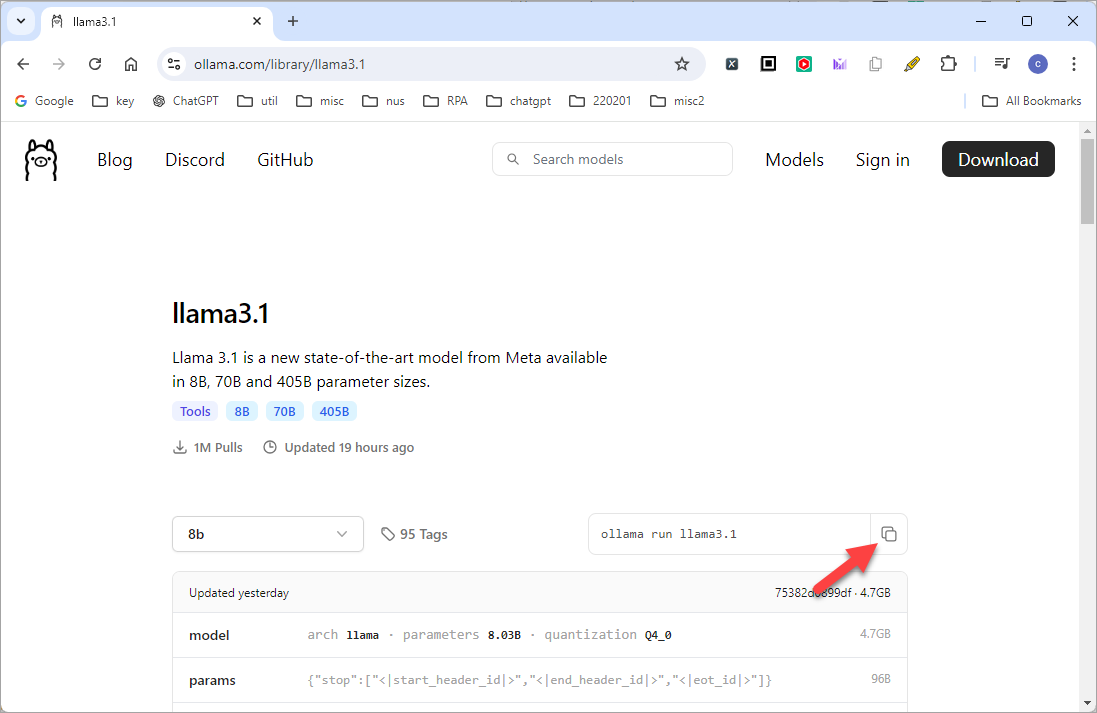
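If you are not sure how much free space you have, a quick check along these lines may help. This is only a sketch: the helper name `has_enough_space` is hypothetical, and `"."` is a placeholder for a folder on the drive where the model will be stored.

```python
import shutil


def has_enough_space(path, required_gb):
    """Return True if the drive holding `path` has at least `required_gb` GB free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1_000_000_000


# 4.7 is the download size quoted above for the 8B model.
# "." is a placeholder: replace it with a folder on the target drive.
print(has_enough_space(".", 4.7))
```

It is sensible to leave some headroom beyond the quoted download size, since the figure only covers the model itself.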

- Let's say you select the 8B model. Now click the "Copy" icon on the right.

- Go back to the command prompt and paste the command with Ctrl-V.

- The model will now be downloaded to your local machine. This will take some time. So grab a cup of coffee...

- When the model is downloaded, you will see "success" and the prompt >>>.

- To test this, you can try any prompt, such as the following:

Please help to write a python code to display all the files in the folder d:\test1

- Note that by default, Ollama runs from the command prompt. In the next section, I will show you how to set up a web interface that allows you to run Ollama from a browser.

- Similar to Python, you press Ctrl-Z to come out of Ollama and go back to the command prompt. If that does not work in your version, type /bye or press Ctrl-D instead.

Note that most laptops do not have a dedicated graphics card, so responses may be noticeably slower than ChatGPT.

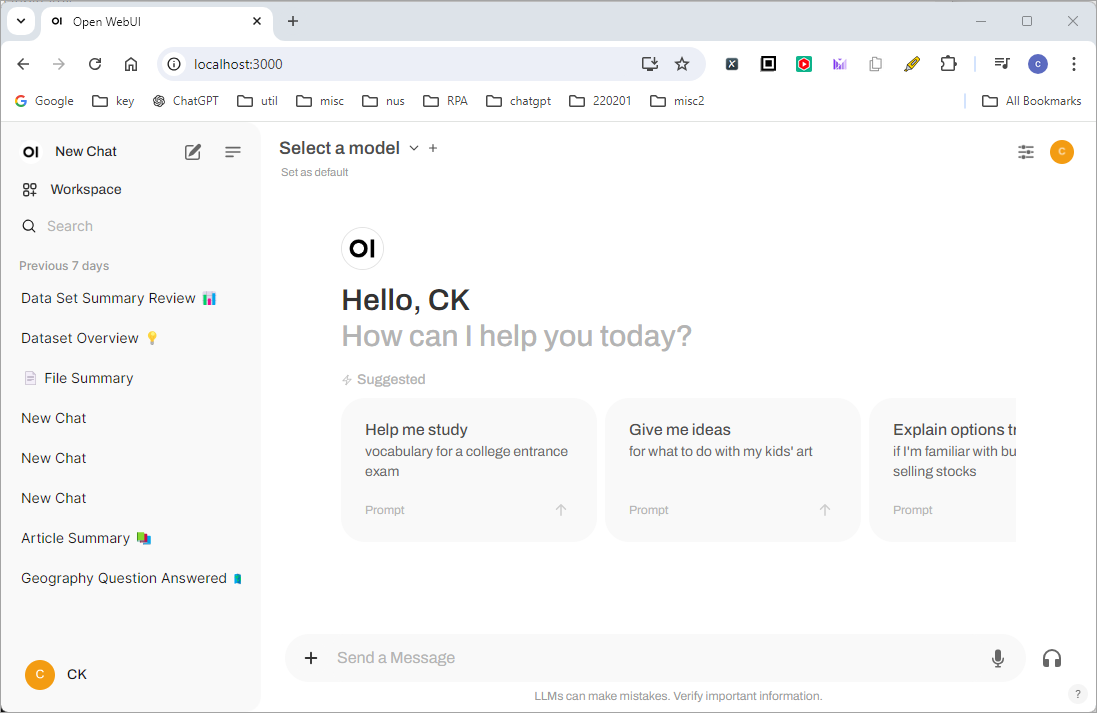
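Besides typing at the >>> prompt, the same prompt can be sent from a script through Ollama's local REST API. The sketch below assumes the default address and the `/api/generate` endpoint. The model name `llama3.1` is an assumption: use whatever name `ollama list` shows on your machine. The helper names `build_request` and `ask_ollama` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's local REST API at its default address.
OLLAMA_GENERATE_URL = "http://127.0.0.1:11434/api/generate"


def build_request(prompt, model="llama3.1"):
    """Build a POST request asking the model for one complete (non-streamed) reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt, model="llama3.1", timeout=300):
    """Send the prompt to the local Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `print(ask_ollama(r"Please help to write a python code to display all the files in the folder d:\test1"))` sends the test prompt from this section. The raw string (`r"..."`) keeps `\t` in the path from being read as a tab. The long timeout is deliberate, since replies can take a while on a laptop.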

Access Ollama from browser

- By default, Ollama runs from the command prompt. To run Ollama from a browser, go to https://docs.openwebui.com/. Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

- The page details many different ways of setting up Open WebUI. The easiest way is by using Docker. Docker is an open-source platform designed to automate the deployment, scaling, and management of applications using containerization. Containers are lightweight, portable, and self-sufficient units that include everything needed to run a piece of software, such as the code, runtime, libraries, and system dependencies.

- If you already have Docker Desktop installed on Windows, skip ahead to the step where you run the docker command.

- If you do not have Docker Desktop installed on Windows, download the installer from the Docker Desktop homepage. It currently supports Windows, Mac and Linux. Run the installer and follow the instructions on the screen.

- Once you have Docker Desktop installed and running, open a command prompt and run the following:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

- When the installation completes, you will be able to run Ollama from a browser using:

http://localhost:3000/

- You should now see a ChatGPT-like page running in your browser, using the Ollama you set up above. You can test it with any prompt. Note that it also supports PDF, CSV and Excel files. Just drag and drop the file into the browser and start prompting.

- Enjoy your local version of ChatGPT!!!
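The docker run command in this section packs several options into one line. As a rough guide to what each part does, here is the same command split into a Python list, one option per line. The comments are my own short summaries of standard Docker options, not text from the Open WebUI documentation.

```python
import shlex

# The Open WebUI command from this section, split into one option per line.
docker_command = [
    "docker", "run",
    "-d",                                   # run the container in the background
    "-p", "3000:8080",                      # host port 3000 -> container port 8080
    "--add-host=host.docker.internal:host-gateway",  # lets the container reach the host
    "-v", "open-webui:/app/backend/data",   # named volume mounted at the app's data folder
    "--name", "open-webui",                 # container name
    "--restart", "always",                  # restart the container automatically
    "ghcr.io/open-webui/open-webui:main",   # image to run
]

# Joining the list gives back the single-line command to paste into the prompt.
print(shlex.join(docker_command))
```

The `-p 3000:8080` mapping is why the page is opened at port 3000 in the browser. If you prefer to start the container from Python rather than pasting the command, `subprocess.run(docker_command, check=True)` would run the same thing.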
